Stop selling files: The case for streaming tensors in GeoAI

Rasteret sped up geospatial image reads by 10x. But users still spend 80% of their time on ETL before they even feed their GPUs. It's like having a fast car on roads full of potholes. We investigate why, and share our solution.

Intro

Last year we built the Rasteret library, which sped up access to Earth Observation (EO) images by 10x without copying the original data. We honestly thought this would solve a lot of problems. But users were still spending 80% of their time on ceremonies before they could feed EO images to their GPUs: searching STAC catalogs, filling out forms to download images, drawing polygons on maps and waiting for emailed links, hunting for RAI information. All of this still happens in the age of cloud-native formats and AI agents.

It's like we built a faster car for everyone, but the roads are still full of potholes. So we set out to find out why.

The gap for EO imagery

2025 was the year Defense "ate" everything. Governments signed nine-figure checks for dedicated satellite constellations and for proprietary end-to-end intelligence systems.

Honestly? This is good: it shows that governments buy tangible outcomes, whatever the cost.

But what about the rest of the commercial market: insurance, agriculture, supply chains, real estate? Commercial EO as an industry, whether it sells raw images from space or insights from sophisticated science labs, is fractured when it tries to work as one system, split between open data standards and each provider's special requirements.

Tabular geospatial data (vectors) is already moving to standards like Parquet and Iceberg, while images from satellites and drones are still tricky to work with. In this they resemble data in other domains, such as medical imagery and machinery CAD models, which are also hard to bring into analytics for multimodal science.

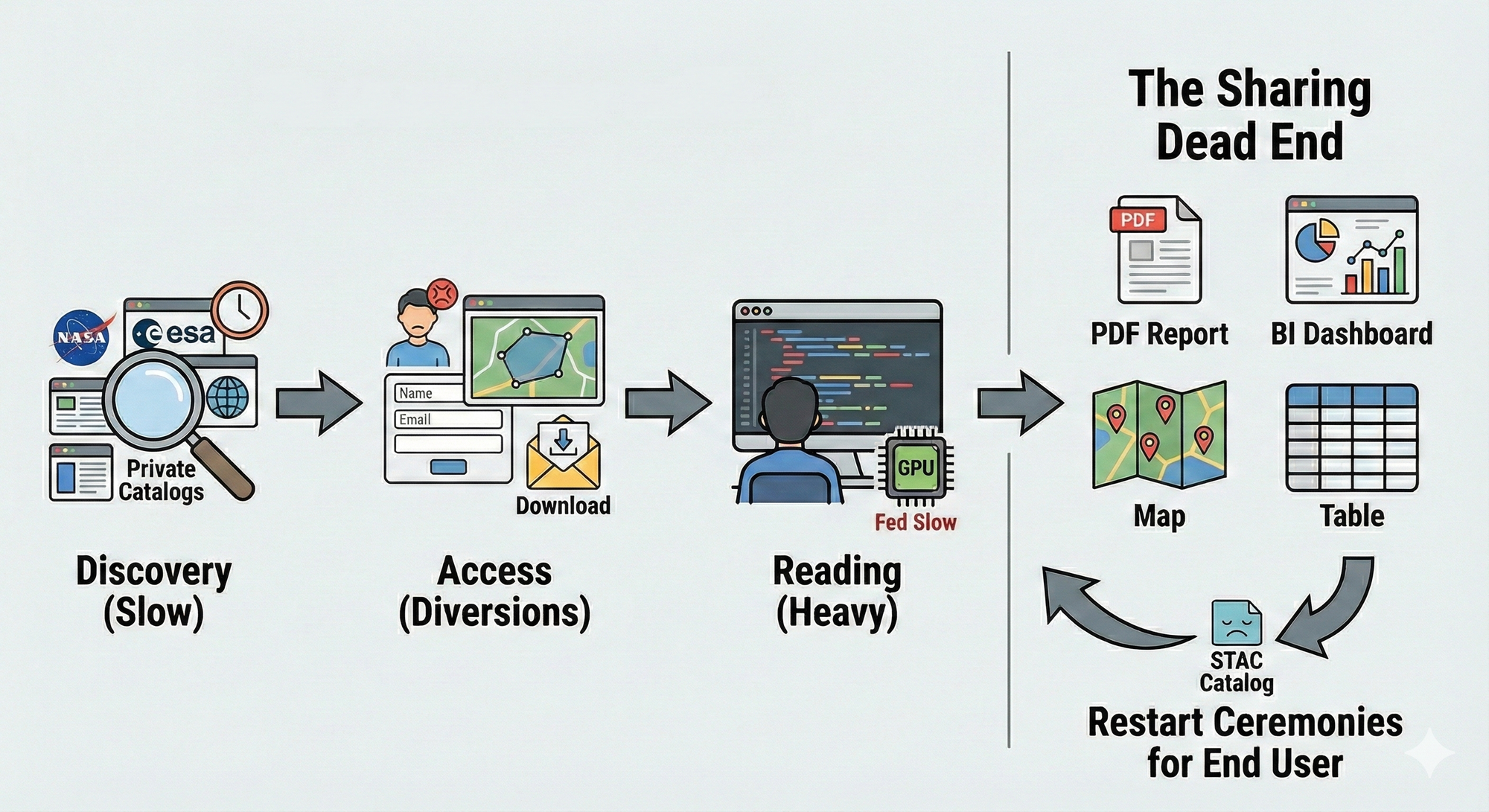

The ceremonies to face

If you are an ML team building a risk model for real estate today and you want to use EO images of any kind, then before you write a single line of ML code you have to survive three ceremonies: Discover, Access and Read.

- Discovery is slow: you search across NASA, ESA, private companies' catalogs and other public catalogs

- Access is full of detours and lacks strict standards: you fill out forms for data, draw polygons on a map and get download links via email, and every data provider's "catalog" is different

- Reading is heavy: EO images need specialised tools and boilerplate code to keep the GPU fed

As Aravind from Terrawatch rightly said, for most companies using EO, the "hidden product" is their internal data pipeline. They burn their seed money on custom infrastructure just to do the unsexy work of finding imagery and handling the differences between sources.

The sharing dead end

Let's say you survive the ceremonies and derive great insights from your AI model. How do you share them? PDF reports. BI dashboards. Maps. Images converted to tables.

You take high-dimensional, computable data and flatten it for human eyeballs.

If you really want to share them as images, you end up publishing STAC catalogs, which likely restarts the same ceremonies for every end user who wants to build on your outputs.

What matters in the age of Agents

If a human engineer needs weeks to collate and prepare EO datasets, the task is out of reach for a generic AI agent.

We are moving to a world of MCP (Model Context Protocol) and A2A (agent-to-agent) commerce. If an AI agent is trying to answer a prompt like "estimate my supply chain's carbon emissions" or "should I buy my home here?", it might think: "location data could be insightful for this prompt, let me get it."

Today, that agent hits a wall. It hits a "Contact Sales" form or a "Download" button. Just like a human does.

Be it a satellite manufacturer publishing its images, a non-profit distributing deforestation predictions, or a high-end lab sharing Geo Foundation Model predictions, all these images need to be FAIR (Findable, Accessible, Interoperable and Reusable): easy to find, read and attribute.

We believe the Commercial EO industry needs its own protocol for images: a connectivity layer that handles the handshake between a raw S3 bucket and a GPU tensor, and creates a better experience for both humans and AI agents.

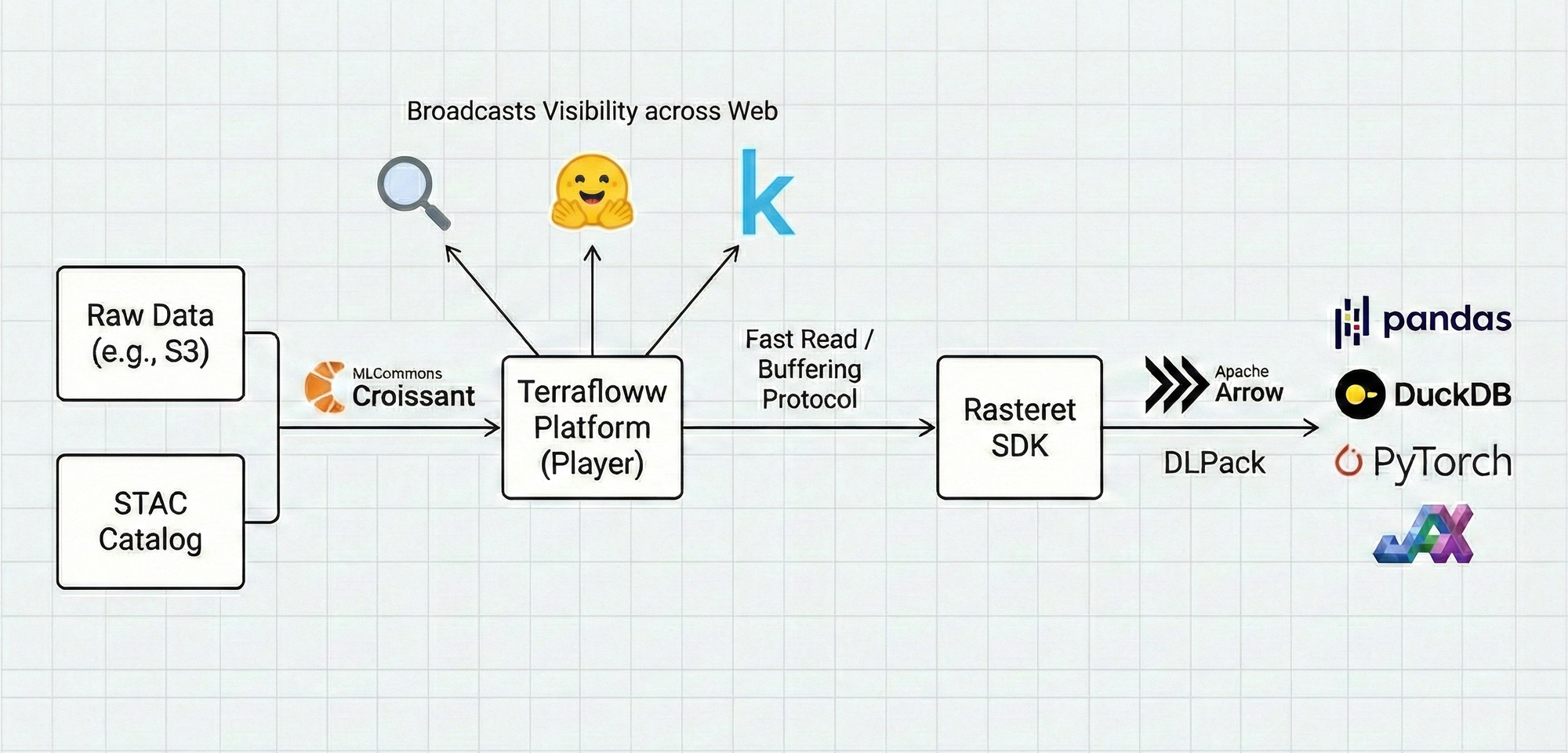

The Solution: Stream Tensors, not Files

Instead of forcing you to download GeoTIFFs, or inventing a new file format that "shoe-horns" your data by copying images into tables, we borrow from video streaming: the Rasteret SDK acts as the buffering protocol, while the Terrafloww Platform acts as the player.

We are building on the open MLCommons Croissant metadata standard to make your datasets easier to find across the web than STAC catalogs do, while our SDK speaks Apache Arrow and DLPack.
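To make "stream tensors, not files" concrete, here is a minimal sketch of the arithmetic behind chip-level reads from a tiled (Cloud-Optimized) GeoTIFF. It assumes the file's tile layout and its TileOffsets/TileByteCounts tags have already been read once and cached as an index, and that tiles are stored row-major in a single plane. The `TiledRasterIndex` class and the `range_header` helper are hypothetical illustrations, not Rasteret's API; decompression, band interleaving and georeferencing are left out.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TiledRasterIndex:
    """Hypothetical cached index of one tiled GeoTIFF (single plane, row-major tiles)."""

    width: int                   # image width in pixels
    height: int                  # image height in pixels
    tile_width: int              # TileWidth tag
    tile_height: int             # TileLength tag
    tile_offsets: List[int]      # TileOffsets tag: byte offset of each tile
    tile_byte_counts: List[int]  # TileByteCounts tag: compressed size of each tile

    @property
    def tiles_across(self) -> int:
        # Ceiling division: a partial tile at the right edge still counts
        return -(-self.width // self.tile_width)

    def byte_ranges(self, col_off: int, row_off: int,
                    win_w: int, win_h: int) -> List[Tuple[int, int]]:
        """Return (offset, length) of every tile overlapping the pixel window."""
        # Clamp the window to the image; end coordinates are exclusive
        c0, r0 = max(col_off, 0), max(row_off, 0)
        c1 = min(col_off + win_w, self.width)
        r1 = min(row_off + win_h, self.height)
        if c0 >= c1 or r0 >= r1:
            return []  # window is empty or lies outside the image
        ranges = []
        for tr in range(r0 // self.tile_height, (r1 - 1) // self.tile_height + 1):
            for tc in range(c0 // self.tile_width, (c1 - 1) // self.tile_width + 1):
                i = tr * self.tiles_across + tc  # row-major tile index
                ranges.append((self.tile_offsets[i], self.tile_byte_counts[i]))
        return ranges


def range_header(offset: int, length: int) -> str:
    """HTTP Range header value for one tile; the end byte is inclusive."""
    return f"bytes={offset}-{offset + length - 1}"


idx = TiledRasterIndex(
    width=1024, height=1024, tile_width=256, tile_height=256,
    tile_offsets=[1000 + i * 50_000 for i in range(16)],  # made-up offsets
    tile_byte_counts=[50_000] * 16,                       # made-up sizes
)
for off, n in idx.byte_ranges(300, 300, 256, 256):
    print(range_header(off, n))
```

For a 1024x1024 image with 256x256 tiles, a 256x256 window at pixel (300, 300) touches only 4 of the 16 tiles, so a client issues four small range requests against the object in S3 instead of downloading the whole file.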

So what does this mean for you?

For Data Providers -

- Keep your images in your own S3 bucket, zero copying.

- We index them; you add your attribution and license information

- Increased visibility of your data on the web, with correct metadata

- Get paid for every chip that flows out of your S3

- Set prices for very granular units, not the old "$ per sq km"

- Use Excel-like formulas to tune prices for micro-units of data

- Track every byte you share and the money you earn on our dashboard
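As an illustration of per-chip metering and pricing (rather than "$ per sq km"), here is a minimal sketch. The `ChipRead` record, the `example_rule` formula and its prices are all hypothetical and are not Terrafloww's billing model; each price rule is simply a function of one read, standing in for a spreadsheet-style formula. `Decimal` is used so that money totals do not pick up floating-point rounding errors.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, Dict, Iterable


@dataclass(frozen=True)
class ChipRead:
    """One metered read: which consumer pulled which chip, from which dataset."""
    consumer: str
    dataset: str
    bands: int
    nbytes: int


@dataclass
class Usage:
    """Running totals of the kind a usage dashboard would show."""
    chips: int = 0
    nbytes: int = 0
    cost: Decimal = Decimal("0")


# A price rule is a function of one read, much like a spreadsheet formula
PriceRule = Callable[[ChipRead], Decimal]


def example_rule(read: ChipRead) -> Decimal:
    # Hypothetical formula: $0.002 per chip, plus $0.001 per band beyond the first
    return Decimal("0.002") + Decimal("0.001") * (read.bands - 1)


def meter(reads: Iterable[ChipRead], rules: Dict[str, PriceRule],
          key: Callable[[ChipRead], str] = lambda r: r.consumer) -> Dict[str, Usage]:
    """Aggregate chips, bytes and cost, pricing each read with its dataset's rule."""
    totals: Dict[str, Usage] = defaultdict(Usage)
    for read in reads:
        usage = totals[key(read)]
        usage.chips += 1
        usage.nbytes += read.nbytes
        usage.cost += rules[read.dataset](read)
    return dict(totals)


reads = [
    ChipRead("agent-42", "ndvi-demo", bands=1, nbytes=65_536),
    ChipRead("agent-42", "ndvi-demo", bands=4, nbytes=262_144),
    ChipRead("fund-7", "ndvi-demo", bands=4, nbytes=262_144),
]
rules = {"ndvi-demo": example_rule}
print(meter(reads, rules))                           # consumer view: spend
print(meter(reads, rules, key=lambda r: r.dataset))  # provider view: earnings
```

The same `meter` call with `key=lambda r: r.dataset` turns the consumer-side view of spend into a provider-side view of earnings per dataset, so both dashboards can come from one stream of metered reads.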

For Data Consumers (Humans/AI Agents) -

- With the Rasteret SDK you get:

  - Easier discovery and filtering of datasets

  - 10x faster reads of EO images, with no boilerplate code to feed your GPUs

  - Full interoperability with PyTorch, JAX, DuckDB and more libraries via Apache Arrow and DLPack

- Track every byte you query and what it costs on our dashboard
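To show what DLPack interoperability means in practice, here is a minimal sketch of a zero-copy handoff. `read_chip` is a hypothetical stand-in for an SDK call that returns a decoded chip; it is not Rasteret's API. The sketch uses NumPy's `np.from_dlpack` (NumPy 1.22 or later) so that it runs with NumPy alone; `torch.from_dlpack` accepts the same kind of producer object, so a PyTorch user would call that instead. The Arrow side of the story is not covered here.

```python
import numpy as np


def read_chip(bands: int = 4, height: int = 256, width: int = 256) -> np.ndarray:
    """Hypothetical stand-in for an SDK call returning a decoded chip as (bands, H, W)."""
    return np.zeros((bands, height, width), dtype=np.float32)


def handoff(chip: np.ndarray) -> np.ndarray:
    """Import the chip through the DLPack protocol without copying its bytes."""
    # np.from_dlpack calls chip.__dlpack__() under the hood; any DLPack
    # consumer (for example torch.from_dlpack) can import the same buffer.
    return np.from_dlpack(chip)


chip = read_chip()
tensor = handoff(chip)
chip[0, 0, 0] = 1.0                    # write through the original buffer
print(tensor[0, 0, 0])                 # the imported view sees the change
print(np.shares_memory(chip, tensor))  # both names point at the same memory
```

Because the consumer receives a view of the producer's memory rather than a copy, handing a chip from the reader to a training framework costs no extra allocation, which is the property the "no boilerplate to feed your GPUs" claim relies on.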

Whether an AI agent reads as little as 5 chips of an EO image or a hedge fund reads images of a whole continent, providers and consumers alike can speed up, monitor and monetize every byte with Terrafloww.

This is the foundation needed to make EO data truly ready for agentic commerce.

What if you don't wish to sell data?

All the features listed above work the same way for your internal needs.

Filter, discover and accelerate internal analysis of your image datasets with our SDK, and monitor and bill internal teams across your enterprise with our console. No need to re-architect your data platform: we integrate with it.

Will it devalue my data?

Some might fear that if we make data this easy to mix and match, AI agents will just combine datasets to create something better, lowering the value of the original source.

To that we say: it's possible, but when friction drops to zero, the volume of innovation skyrockets, and the market accelerates towards better data products. We want to see what happens when a group of ML scientists can instantly monetize their inference models without building a sales team, or when a non-profit's dataset can be effortlessly consumed by a Fortune 500 company's AI agent.

Join the Beta

The Defense market has its systems. It’s time the Commercial EO market had its own.

Come test it out. We would love your feedback, and we'd love to build this future with you.

If you'd like to know more, or just wanna chat with us, join our Discord. We will be releasing more updates and blog posts soon.