Efficient Cloud-Native Raster Data Access: An Alternative to Rasterio/GDAL

A journey of optimization of cloud-based geospatial data processing.

The rapid growth of Earth observation data in cloud storage, which will continue to grow exponentially, powered by falling rocket launch prices by companies like SpaceX, has pushed us to think of how we access and analyze satellite imagery. With major space agencies like ESA and NASA adopting Cloud-Optimized GeoTIFFs (COGs) as their standard format, we're seeing unprecedented volumes of data becoming available through public cloud buckets.

This accessibility brings new challenges around efficient data access. In this article, we introduce an alternative approach to cloud-based raster data access, building upon the foundational work of GDAL and Rasterio.

The Evolution of Raster Storage

Traditional GeoTIFF files weren't designed with cloud storage in mind. Reading these files often required downloading entire datasets, even when only a small portion was needed.

The introduction of COGs marked a significant shift, enabling efficient partial reads through HTTP range requests.

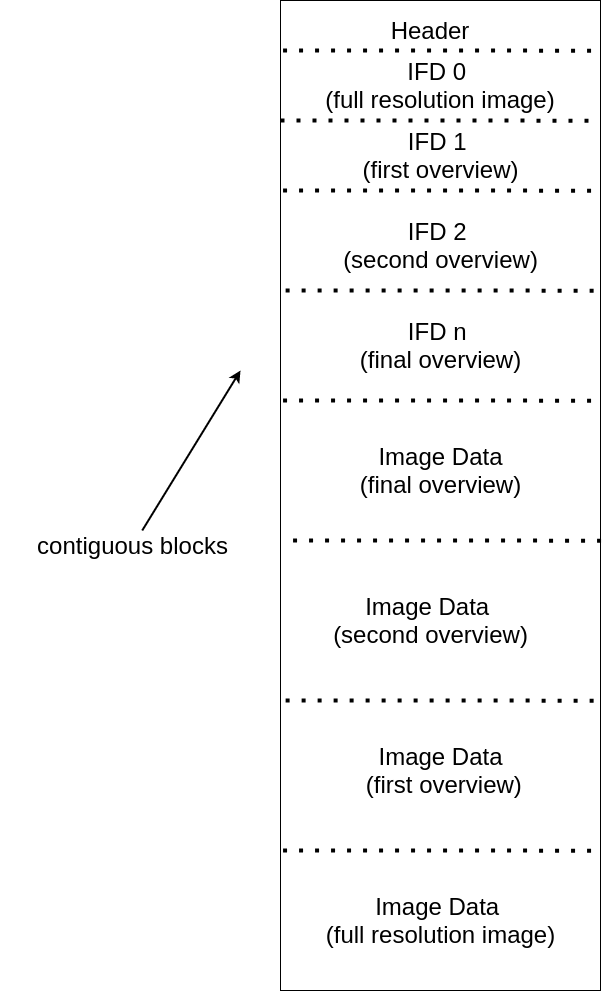

COGs achieve this efficiency through their internal structure:

- An initial header containing the Image File Directory (IFD)

- Tiled organization of the actual image data

- Overview levels for multi-resolution access

- Strategic placement of metadata for minimal initial reads

This structure allows tools to read specific portions of the file without downloading the entire dataset.

However we feel that even with COGs, trying to do something like a Time-series NDVI graph for a few polygons across a few regions is not as fast as it could be, especially due to the latency caused by AWS S3 throttling.

The STAC Ecosystem and GeoParquet

The SpatioTemporal Asset Catalog (STAC) specification has emerged as a crucial tool for discovering and accessing Earth observation data. While STAC APIs provide standardized ways to query satellite imagery, the Cloud Native Geospatial (CNG) community took this further by developing STAC GeoParquet.

STAC GeoParquet leverages Parquet's columnar format to enable efficient querying of STAC metadata. The columnar structure allows for:

- Filter pushdown for spatial and temporal fields

- Efficient compression of repeated values

- Reduced I/O through column pruning

- Fast parallel processing capabilities with the right parquet reading libraries

Current access patterns and challenges

The current approach to accessing COGs, using GDAL and Rasterio, typically involves:

- Initial GET request to read the file header

- Additional requests if needed if header not found in initial request

- Final requests to read the actual data tiles

For cloud-hosted public datasets, this pattern can lead to:

- Multiple HTTP requests per file access

- Increased latency, especially across cloud regions

- Potential throttling on public buckets

- Higher costs from numerous small requests to paid buckets

A New Approach: Extending STAC GeoParquet and Byte-range calculations

Extending stac-geoparquet with new columns

Building upon the excellent stac-geoparquet, we explored adding some of COG’s internal metadata information directly into it.

- Tile offset info

- Tile size info

- Data type

- Compression info

We do a batch process and we pay the cost and time spent to gather COG files metadata upfront.

In our case we took 1 year's worth of Sentinel 2 items from STAC API for this.

Get data from URLs by calculating byte ranges just-in-time

Now that we have 1 year's worth of STAC items in GeoParquet along with each COG's internal metadata. We can do what GDAL does behind the scenes without needing to query the headers of COG files again and again.

We use the info related to tile offsets and tile size info, to calculate the exact byte-range required to be read for each AOI for each COG URL in STAC items.

We then use Python requests module to get the data from the COG URLs, decompress the incoming bytes, since the data resides as deflate compressed COGs, and create the Numpy array.

Below is the current approach to reading a remote COG URL using Rasterio.

# Current approach with GDAL/rasterio requires

# multiple http requests per file behind the scenes

# find scenes from STAC APIs:

import rasterio

from pystac_client import Client

AOI_POLYGON = Polygon([(77.5, 13.0), (77.55, 13.0),

(77.55, 13.05),(77.5, 13.05),(77.5, 13.0)])

client = Client.open("https://earth-search.aws.element84.com/v1")

search = client.search(

collections=["sentinel-2-l2a"],

datetime=f"{start_date.isoformat()}/{end_date.isoformat()}",

intersects=mapping(AOI_POLYGON),

query={"eo:cloud_cover": {"lt": 20}}

)

items = list(search.get_items())

for item in items:

# First request to get IFD and headers of a file

src = rasterio.open(item.assets['red'].href)

# Second or third may happen behind the scene by GDAL

# if headers are not completely read in 1 http request

# once its read, rasterio open dataset is assigned

epsg = item.properties["proj:epsg"]

utm_polygon = wgs84_polygon_to_utm(AOI_POLYGON, epsg)

geojson = utm_polygon.__geo__interface

# using src now, we can ask GDAL to do the byte-range based request

# to get only those internal tiles of COG that cover our AOI

# and it returns a numpy array and its transform

data, transform = rasterio.mask(src, geojson, crop=True)

Current Approach

And below is is our approach.

from pathlib import Path

from shapely.geometry import Polygon

import xarray as xr

from rasteret import Rasteret

from rasteret.constants import DataSources

from rasteret.core.utils import save_per_geometry

def main():

# 1. Setup workspace folder and parameters

workspace_dir = Path.home() / "rasteret_workspace"

workspace_dir.mkdir(exist_ok=True)

# custom name for local STAC collection to be created

custom_name = "bangalore"

date_range = ("2024-01-01", "2024-03-31")

data_source = DataSources.SENTINEL2

# get bounds of polygon above for stac search

bbox = AOI_POLYGON.bounds

# instantiate Rasteret class

processor = Rasteret(

custom_name=custom_name,

data_source=data_source,

output_dir=workspace_dir,

date_range=date_range,

)

# create a local STAC geoparquet with COG metadata added to it

processor.create_collection(

bbox=bbox,

date_range=date_range,

cloud_cover_lt=20,

)

# use the processor to get the image data for polygons and bands

# as a multidimensional xarray dataset

# this uses the local geoparquet created above to optimize HTTP

# requests to multiple COG files (1 request per required tile)

# bands, geometries can be changed here

# date_range too can be changed but must be within the date range

# available in local geoparquet

# pySTAC's "search" filters, like "platform","cloud_cover_lt" and

# others can be passed here too.

xarray_ds = processor.get_xarray(

geometries=[AOI_POLYGON],

bands=["B4", "B8"],

cloud_cover_lt=20,

date_range=date_range,

)

Our New Approach

Performance Insights

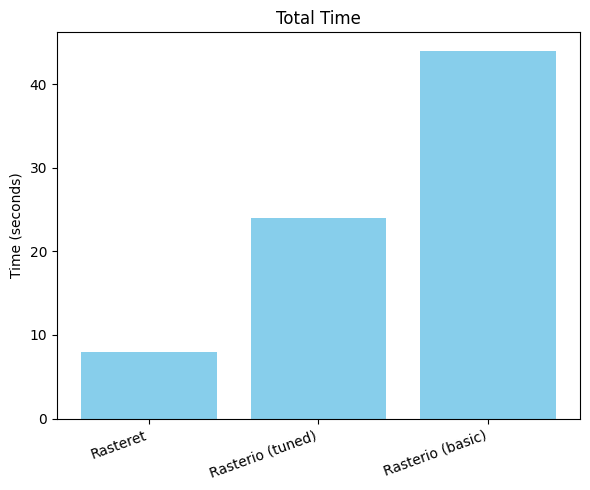

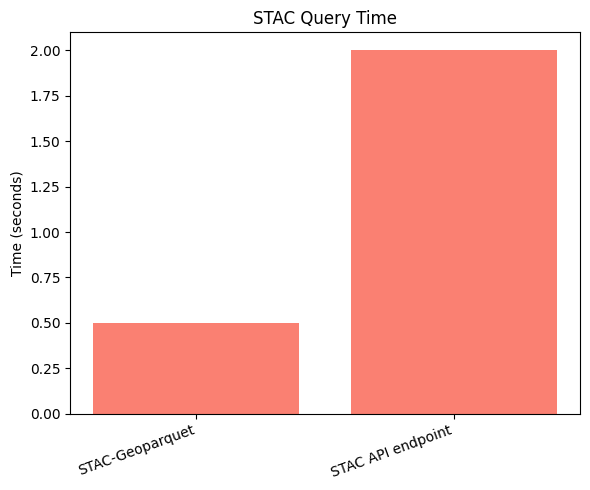

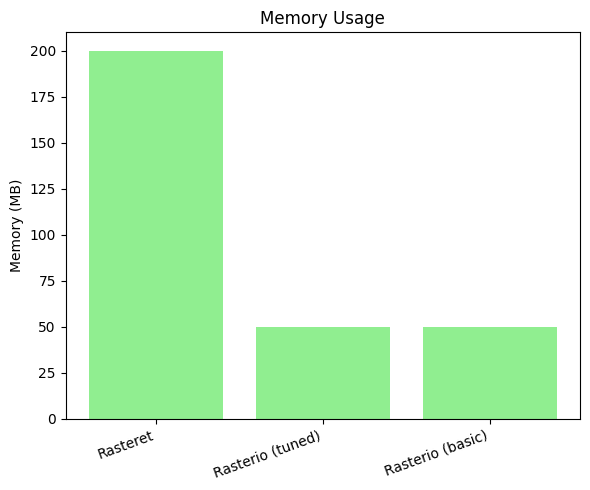

Initial benchmarks show promising results for this approach. The aim was to improve time-to-first-tile.

There are a few things to note before we get to some raster query speed tests -

It is important to tune GDAL configurations correctly to fully benefit from GDAL’s own multi-range requests, and use settings that avoid GDAL listing all S3 files, which wastes time and adds more S3 GET requests.

Rasterio with GDAL’s virtual cache gets faster with subsequent reads to the same COG S3 file, but if the analysis is across time and space, then we pay the latency time to get headers per new file that is not cached.

This is also true across new VMs/Lambdas/Serverless compute, because a new Rasterio "Session/Environment" will always be created for each run of your Python code in a new Python environment, so scaling out to multiple VMs means these new ENVs keep sending HTTP requests to each COG file it interacts with, even if it was used in an earlier Session, to complete the rasterio.mask task.

Our approach to add new columns to STAC GeoParquet avoids paying this time and cost of multiple HTTP requests for each COG file in each new Python process.

We have made a custom python code for byte-range calculation code based on GDAL’s C++ approach. We also created custom tile merging code as well incase 1 AOI intersects with more than 1 internal tile of a COG.

This allows our library to be pretty lightweight and not require any GDAL dependencies, except for the geometry_mask/rasterize functions.

With all this context set, below are some initial test results -

Machine config - 2 CPU (4 threads), 2GB RAM machine

Key Factors to speed up in our approach:

- Reduced HTTP requests through pre-calculating byte ranges just-in-time for each AOI for each COG file

- Pre-cached metadata in STAC-GeoParquet eliminating API calls to STAC API JSON endpoints

- Optimized parallel processing for spatio-temporal analysis

Current Scope and Limitations

While these initial results are encouraging, it's important to note where this approach currently works best:

Optimal Use Cases:

- Time-series analysis of Sentinel 2 data across multiple small polygons

- Optimize paid public bucket data access, to reduce GET requests costs

Areas of improvement:

- Pure Python or Rust implementations of operations like rasterio.mask

- Adding more data sources like USGS Landsat and others

- LRU or other cache for repeated same tile queries

- Reducing memory usage

- Benchmark against Xarray and Dask workloads

- Test on multiple polygons across the world for 1 year date range

What next:

As we continue to develop and refine this approach, we're excited to engage with the geospatial community to gather feedback, insights, and contributions. By collaborating and building upon each other's work, we can collectively push the boundaries of what's possible with cloud-based raster data access and analysis.

We're currently working on an open-source library which will be called “Rasteret” that implements these techniques, and we look forward to sharing the library and more technical details in an upcoming deep dive blog. Stay tuned!

Acknowledgments

This work stands on the shoulders of giants in the open-source geospatial community:

- GDAL and Rasterio, for pioneering geospatial data access

- The Cloud Native Geospatial community for STAC and COG specifications

- PyArrow and GeoArrow for efficient parquet filtering

- The broader open-source geospatial community

We're grateful for the tireless efforts and contributions of these projects and communities. Their dedication, expertise, and willingness to share knowledge have laid the foundation for approaches like the one outlined here.

Terrafloww is proud to support the Cloud Native Geospatial forum by being a newbie startup member in their large established community.